For the Animation course, we built an animation engine from the ground up in C++ and OpenGL. You first drag in a file containing a rigged mesh, meaning a mesh with a basic skeletal system of joints. You then drag an animation file onto the mesh to make it move, and the mesh vertices follow their corresponding bones. To animate the skeletons correctly, kinematics was implemented throughout the joint hierarchy. Animation files usually store the motion as keyframes, so to make the animations smooth, we implemented linear interpolation between the keyframes.
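Because animation files only store joint transforms at discrete keyframe times, the engine has to fill in the frames in between. The sketch below illustrates linear interpolation of a joint translation in Python rather than the engine's C++; the `(time, value)` keyframe layout and the `sample` helper are hypothetical. Rotations are usually interpolated with quaternion slerp instead of plain linear interpolation.

```python
from bisect import bisect_right
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Keyframe = Tuple[float, Vec3]  # hypothetical layout: (time in seconds, joint translation)


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linearly interpolate between two 3D vectors, with t in [0, 1]."""
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def sample(keys: Sequence[Keyframe], time: float) -> Vec3:
    """Return the interpolated joint translation at `time`; keys must be sorted by time."""
    # Clamp to the first/last keyframe outside the animation's time range.
    if time <= keys[0][0]:
        return keys[0][1]
    if time >= keys[-1][0]:
        return keys[-1][1]
    times = [k[0] for k in keys]
    i = bisect_right(times, time)  # keys[i - 1].time <= time < keys[i].time
    (t0, v0), (t1, v1) = keys[i - 1], keys[i]
    return lerp(v0, v1, (time - t0) / (t1 - t0))


keys = [(0.0, (0.0, 0.0, 0.0)), (1.0, (2.0, 4.0, 0.0)), (3.0, (2.0, 4.0, 6.0))]
print(sample(keys, 0.5))  # (1.0, 2.0, 0.0)
```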

SenseGlove is a company that develops haptic gloves, with which you can feel objects and see your hands in virtual reality. For my internship at SenseGlove, the goal was to add a physical joystick and buttons to the glove for quicker, more broadly compatible interactions in VR. The video above is the demonstration I created in Unity to research the user experience and show examples of its uses. The internship covered prototyping the physical joystick and buttons: contacting distributors for components, assembling the electronics, implementing the firmware and SDK support, and creating the Unity demo. In the demo, people could use one button to teleport and another to draw in mid-air with the color selected on the joystick. They could also set the color of a paint roller and use it to instantly color an entire object.

In the computer vision course, we learned how to bring simulations into the real world and the real world into simulations. In the first project, we used OpenCV to calibrate the camera and find the orientation of a checkerboard. Linking this to OpenGL, a cube is drawn after transforming it into checkerboard space, and the GLSL fragment shader simulates reflections of a virtual light source. The other assignment was 3D reconstruction from four cameras, built on a prebuilt C++ template. Calibration gave the transformation from each camera to room space. We extracted a mask of the subject and turned a voxel (a 3D pixel) on when its 3D point, transformed to camera space, falls on the white part of the mask in all four cameras. This yields a 3D reconstruction in voxels, from which a mesh can be generated with the marching cubes algorithm.
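The voxel rule described above can be sketched as follows, assuming each calibrated camera is summarised by a 3x4 projection matrix `K [R | t]` and each mask is a 2D grid of 0/1 values indexed `[row][col]`. The function names are hypothetical and this is not the course template's code; the marching cubes step is not shown.

```python
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]
Projection = Sequence[Sequence[float]]  # assumed 3x4 matrix K [R | t] from calibration
Mask = Sequence[Sequence[int]]          # silhouette mask, mask[row][col] == 1 for the subject


def project(P: Projection, X: Point3) -> Optional[Tuple[int, int]]:
    """Project a world point to an integer pixel (col, row); None if it is behind the camera."""
    x, y, z = X
    u, v, w = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in P)
    if w <= 0:
        return None
    return round(u / w), round(v / w)


def in_silhouette(P: Projection, mask: Mask, X: Point3) -> bool:
    """True if X projects inside the image and onto a white (foreground) mask pixel."""
    pixel = project(P, X)
    if pixel is None:
        return False
    col, row = pixel
    return 0 <= row < len(mask) and 0 <= col < len(mask[0]) and mask[row][col] == 1


def carve(voxels: Sequence[Point3], cameras: Sequence[Projection],
          masks: Sequence[Mask]) -> List[Point3]:
    """Turn a voxel on only if it lies inside the subject's silhouette in every camera."""
    return [X for X in voxels if all(in_silhouette(P, m, X) for P, m in zip(cameras, masks))]
```

A single camera cannot tell depth, so each mask only removes voxels outside its silhouette cone; intersecting all four cones is what shapes the reconstruction.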

During the robotics course, we combined several techniques to make a self-driving car detect obstacles and pedestrians and steer clear of them. Objects were detected in the lidar data using the Point Cloud Library (PCL): first, the ground-plane points were removed with RANSAC segmentation, and the remaining points were grouped with Euclidean cluster extraction. Pedestrians were detected by matching the locations of the detected lidar objects with pedestrian detections from the camera. This information was then sent to a node that made the car steer around obstacles and stop for objects and pedestrians too close in front of it. In a later course, we compared four different feature inputs for pedestrian detection, including raw pixel values, histograms of oriented gradients (HOG) and convolutional neural network features (CNN, MobileNet), each in combination with different classifiers (support vector machine (SVM), k-NN). The CNN features consistently outperformed the others.
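The ground-removal step can be illustrated with a minimal pure-Python RANSAC plane fit. In PCL this step is typically done with `pcl::SACSegmentation` using `SACMODEL_PLANE` and `SAC_RANSAC`; the sketch below is not that API, and the function names, the 0.05 distance threshold and the 200 iterations are assumptions chosen for illustration.

```python
import random
from typing import List, Optional, Sequence, Set, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, d) with a*x + b*y + c*z + d = 0, unit normal


def plane_from_points(p: Point, q: Point, r: Point) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    ux, uy, uz = q[0] - p[0], q[1] - p[1], q[2] - p[2]
    vx, vy, vz = r[0] - p[0], r[1] - p[1], r[2] - p[2]
    # The plane normal is the cross product u x v.
    a, b, c = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-9:
        return None
    a, b, c = a / norm, b / norm, c / norm
    return a, b, c, -(a * p[0] + b * p[1] + c * p[2])


def remove_ground(points: Sequence[Point], threshold: float = 0.05,
                  iterations: int = 200, seed: int = 0) -> Tuple[List[Point], List[Point]]:
    """Split points into (ground, rest) using the RANSAC plane with the most inliers."""
    rng = random.Random(seed)
    best: Set[int] = set()
    for _ in range(iterations):
        # Fit a candidate plane to three random points.
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        # Inliers are points within `threshold` (point-cloud units) of the candidate plane.
        inliers = {i for i, (x, y, z) in enumerate(points)
                   if abs(a * x + b * y + c * z + d) <= threshold}
        if len(inliers) > len(best):
            best = inliers
    ground = [p for i, p in enumerate(points) if i in best]
    rest = [p for i, p in enumerate(points) if i not in best]
    return ground, rest
```

The remaining `rest` points are what a Euclidean clustering step would then group into individual obstacles.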

In the game physics course of the Game and Media Technology master, we implemented rigid body dynamics, soft body dynamics and a cloth and ragdoll simulation. The rigid and soft body dynamics had to be implemented in our teacher's framework, which could already detect collisions but not resolve them. For the rigid body dynamics, the coefficient of restitution (CR) sets how elastic a collision is: the lower it is, the more energy is lost. Other built-in features include friction, air drag, interpenetration resolution with additional rotational movement, and mouse interaction. The soft body simulation connects the vertices with springs in a tetrahedral structure. To compute all springs simultaneously, the simulation uses matrices for the mass, stiffness and damping constants, in which each entry corresponds to a spring. Together with volume preservation forces, this yields a soft body simulation that supports squeezing by the user and corotational elements. Finally, there is a cloth and ragdoll simulation, built in Unity without RigidBody components (we implemented the physics ourselves). Because we only had two weeks to build it part-time, we did not get to angular constraints and inverse kinematics. The cloth is simulated as vertices connected by springs, and the ragdoll as balls with distance constraints.
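To show how the coefficient of restitution enters a collision response, here is a minimal sketch of the linear impulse between two bodies, j = -(1 + CR) (v_rel · n) / (1/m_a + 1/m_b). It deliberately ignores rotation and friction (a full rigid body solver adds inertia-tensor terms for the angular part), and the function names are hypothetical rather than taken from the course framework.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def resolve_collision(va: Vec3, vb: Vec3, ma: float, mb: float,
                      normal: Vec3, restitution: float) -> Tuple[Vec3, Vec3]:
    """Return the velocities of bodies A and B after a frictionless collision.

    normal: unit contact normal pointing from B towards A.
    restitution: coefficient of restitution in [0, 1]
                 (1 = perfectly elastic, 0 = perfectly inelastic).
    Rotation is ignored: only the linear part of the impulse is applied.
    """
    v_rel = tuple(a - b for a, b in zip(va, vb))
    approach = dot(v_rel, normal)
    if approach >= 0.0:  # bodies are already separating: no impulse
        return va, vb
    j = -(1.0 + restitution) * approach / (1.0 / ma + 1.0 / mb)
    new_va = tuple(v + (j / ma) * n for v, n in zip(va, normal))
    new_vb = tuple(v - (j / mb) * n for v, n in zip(vb, normal))
    return new_va, new_vb
```

With equal masses and CR = 1 the two velocities are swapped; with CR = 0 both bodies end up moving together, which is where the most kinetic energy is lost.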

For the path planning and decision making course, we built a project that simulates a drone dropping packages on balconies in New York. The visualisation was done in CoppeliaSim (previously V-REP). We linked this to Python and modified an existing RRT* library to speed it up significantly. RRT* grows a tree through 3D space by randomly placing a point and connecting it to the tree, and then rewires nearby branches whenever that shortens their path. The speedup was achieved by sampling in a cone towards the goal. The planner first tries to fit points in a narrow cone; if it cannot find a point closer to the goal within a few iterations, it enlarges the cone's angle. In addition to the cone, there is a box around the drone, so it can still find options when it is close to a building. Once a path is found, the drone follows it using realistic drone dynamics with PID velocity control.
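The cone-restricted sampling can be sketched on its own, separately from the RRT* choose-parent and rewire steps. This is an illustration under assumptions, not the modified library: `sample_in_cone`, `widen`, and the `patience` and `factor` values are hypothetical. Directions are drawn uniformly from the spherical cap around the origin-to-goal axis, and a half-angle of pi covers the full sphere.

```python
import math
import random
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def sample_in_cone(origin: Vec3, goal: Vec3, half_angle: float,
                   max_dist: float, rng: random.Random) -> Vec3:
    """Sample a point at most `half_angle` radians off the origin->goal direction."""
    axis = normalize(tuple(g - o for g, o in zip(goal, origin)))
    # Orthonormal basis (axis, u, w) around the cone axis.
    helper = (1.0, 0.0, 0.0) if abs(axis[0]) < 0.9 else (0.0, 1.0, 0.0)
    u = normalize(cross(axis, helper))
    w = cross(axis, u)
    # cos(theta) uniform in [cos(half_angle), 1] gives a uniform direction on the cap.
    cos_t = rng.uniform(math.cos(half_angle), 1.0)
    sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
    phi = rng.uniform(0.0, 2.0 * math.pi)
    direction = tuple(cos_t * a + sin_t * (math.cos(phi) * b + math.sin(phi) * c)
                      for a, b, c in zip(axis, u, w))
    r = rng.uniform(0.0, max_dist)
    return tuple(o + r * d for o, d in zip(origin, direction))


def widen(half_angle: float, stalled_iters: int,
          patience: int = 5, factor: float = 1.5) -> float:
    """Enlarge the cone after `patience` iterations without progress, capped at pi."""
    if stalled_iters >= patience:
        return min(half_angle * factor, math.pi)
    return half_angle
```

A planner would call `widen` whenever several consecutive samples fail to get closer to the goal, and reset the counter once a better point is found.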

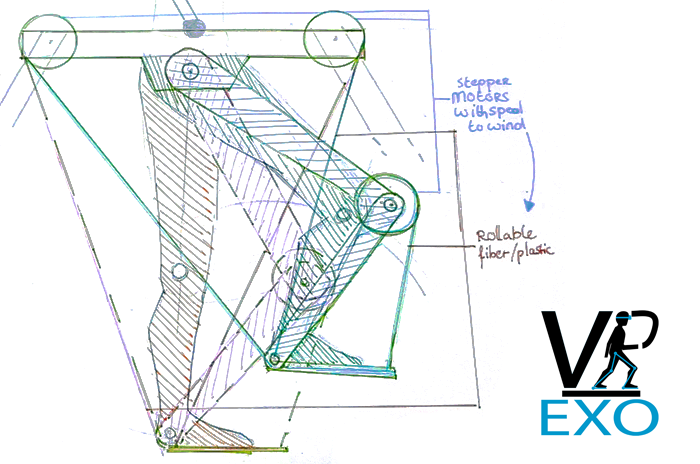

What if we were to make an exoskeleton for the entire body that can be tilted as well? We would be able to simulate anything in VR: walk, step onto things, step into a plane and fly away, and feel all the forces in a virtual world. Holotron and the AxonVR skeleton, which I discovered later (see Homepage), are similar to this idea. Such a system would also be particularly useful for training, rehabilitation and telerobotics. In my spare time, I spent a few weeks researching and designing ideas to solve the locomotion problem with haptic feedback, and since this project I have tried to focus my education fully on working towards this goal. Designing an exoskeleton that counteracts human movements is hard because of the high torque and speed requirements for the motors. Another problem is that you should also be able to move freely without the motors counteracting your forces. The research I did during this time can be downloaded as a PDF below. Please keep in mind that at the time I only had a background in chemistry and 1.5 years of computer science experience. Since attending haptics courses, I know better what can and cannot be done, and I am working towards the end goal differently.

To make a simple proof of concept, I started building a haptic feedback device for the elbow joint. The idea was to provide force feedback, for instance when shooting a bow in VR. I never fully finished it, since there were some (solvable) issues with both the electronics and the welding. In addition, I had to start my master's, which left little time to spend entire days in the workshop. During my master's I have learned a lot, and I would now redesign most of the exoskeleton. The above video shows only a small selection of everything I worked on.

A raytracer renders a 3D environment with physical accuracy, rather than real-time performance, as its priority. This method is mostly used in rendering software. A ray is shot from each pixel into the virtual scene; the intersection with the closest object is registered, and the ray keeps bouncing until the final pixel color is determined. The raytracer from my bachelor's, written in C#, implements glass, mirrors, spotlights, anti-aliasing and triangles. The C++ Whitted raytracer additionally supports Lambert-Beer absorption, textures, area lights and multithreading (205% speedup on dual core h). The car model consists of 360,794 triangles. To render it quickly, a BVH (bounding volume hierarchy) is implemented, which divides the scene into nested boxes. During intersection checks, the algorithm walks through this tree and can skip every box the ray misses, along with all the triangles inside it. The car renders at 1280x720 px in 180 ms on a quad-core hyperthreaded CPU (without a thread pool) and in 2.45 seconds on a single core, which is very fast for a Whitted raytracer on the CPU. The path tracer renders rays from the light source to the camera and is capable of caustics and color bleeding, which the Whitted raytracers are not. To speed it up, I used SIMD (AVX) in the triangle intersection function.
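The box test that lets a BVH skip whole subtrees is commonly implemented with the slab method. The Python sketch below shows that technique; it is not the raytracer's C++ code, and the function name and `t_max` parameter are assumptions for illustration.

```python
import math
from typing import Sequence


def ray_hits_aabb(origin: Sequence[float], direction: Sequence[float],
                  box_min: Sequence[float], box_max: Sequence[float],
                  t_max: float = math.inf) -> bool:
    """Slab test: does origin + t * direction hit the box for some 0 <= t <= t_max?"""
    t_near, t_far = 0.0, t_max
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval of t values where the ray is inside all slabs so far.
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True
```

During traversal, `t_max` can be set to the distance of the nearest triangle hit found so far, so boxes that lie entirely behind that hit are skipped as well.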

A rasterizer renders a 3D environment with real-time performance as its priority; as a result, shadows, lighting and reflections are less accurate. This rendering method is mostly used in games. Starting from a template that could load basic 3D meshes, many basic engine features were implemented. One of them is a scenegraph, which lets objects rotate and translate relative to their parent objects before everything is finally transformed to camera space. This was done with matrix multiplications. Other implemented features include Phong shading, texture mapping and normal maps.
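The parent-relative transforms of a scenegraph come down to multiplying 4x4 matrices up the tree. This Python sketch assumes column vectors (world = parent world x local) and uses a hypothetical `Node` class; it is not the template's actual API.

```python
import math
from typing import List, Optional, Tuple

Mat4 = List[List[float]]


def matmul(a: Mat4, b: Mat4) -> Mat4:
    """4x4 matrix product a * b."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(x: float, y: float, z: float) -> Mat4:
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def rotation_z(angle: float) -> Mat4:
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]


class Node:
    """Scenegraph node with a transform relative to its parent (hypothetical structure)."""

    def __init__(self, local: Mat4, parent: Optional["Node"] = None):
        self.local = local
        self.parent = parent

    def world(self) -> Mat4:
        """Concatenate transforms up the tree: parent.world() * local."""
        if self.parent is None:
            return self.local
        return matmul(self.parent.world(), self.local)


def to_camera(node: Node, view: Mat4) -> Mat4:
    """Model-view matrix: the view (inverse camera) matrix times the node's world matrix."""
    return matmul(view, node.world())


def apply(m: Mat4, p: Tuple[float, float, float]) -> Tuple[float, float, float]:
    """Transform a point (w = 1) and drop the homogeneous coordinate."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))
```

For example, a child offset by (1, 0, 0) under a parent at (5, 0, 0) that is rotated 90 degrees about z ends up at roughly (5, 1, 0): rotating the parent carries the child along.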

This algorithm detects the number of fingers and the position of a hand on a table onto which a projector casts an overlay. This can be used to change the projection when a hand points at a specific spot. The general process is as follows. First, the image is iteratively thresholded to reduce the background noise. Then, the largest object is isolated, and its convex hull and convexity defects are computed. From the angles between the fingers, the number of fingers and their location are determined. The largest challenges in this project were the occlusion of the projector light and the varying arm length and position.
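A common way to turn convexity defects into a finger count is to keep only defects that are both deep and sharp, since each gap between two fingers produces one. In OpenCV, `cv2.convexHull(contour, returnPoints=False)` followed by `cv2.convexityDefects(contour, hull)` yields rows of `[start_index, end_index, farthest_point_index, fixpt_depth]`. The sketch below assumes those indices have already been turned into point triples; the 90-degree and 10-pixel thresholds are assumptions, not necessarily the project's values.

```python
import math
from typing import Sequence, Tuple

Pixel = Tuple[float, float]
Defect = Tuple[Pixel, Pixel, Pixel]  # (start, end, far) points of one convexity defect


def defect_angle(start: Pixel, end: Pixel, far: Pixel) -> float:
    """Angle (radians) at the far point between the two hull points, via the law of cosines."""
    a = math.dist(start, end)
    b = math.dist(start, far)
    c = math.dist(end, far)
    cos_angle = (b * b + c * c - a * a) / (2 * b * c)
    return math.acos(max(-1.0, min(1.0, cos_angle)))


def defect_depth(start: Pixel, end: Pixel, far: Pixel) -> float:
    """Distance from the far point to the hull edge start-end."""
    (x1, y1), (x2, y2), (x0, y0) = start, end, far
    return abs((x2 - x1) * (y0 - y1) - (y2 - y1) * (x0 - x1)) / math.dist(start, end)


def count_fingers(defects: Sequence[Defect], max_angle: float = math.pi / 2,
                  min_depth: float = 10.0) -> int:
    """Count sharp, deep defects (gaps between fingers); n gaps means n + 1 fingers."""
    gaps = sum(1 for s, e, f in defects
               if defect_depth(s, e, f) >= min_depth and defect_angle(s, e, f) < max_angle)
    # Zero gaps cannot distinguish a fist from one raised finger; this sketch reports 0.
    return gaps + 1 if gaps > 0 else 0
```

The depth filter removes the small defects caused by noise along the contour, while the angle filter rejects wide, shallow dips such as the gap between thumb and wrist.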

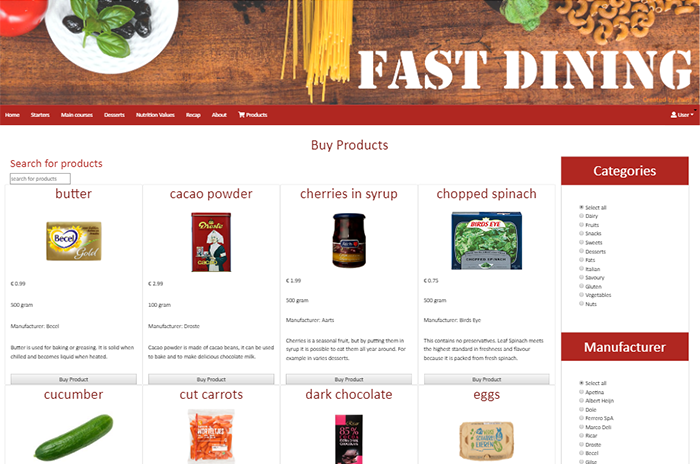

This is a small web shop made for a web technology course. The main focus was to create a functioning web shop with both a front end and a back end. Users can create an account, log in, filter products and buy a product, after which they can view it in their order history. The project focused mostly on the back end and less on responsiveness and looks.

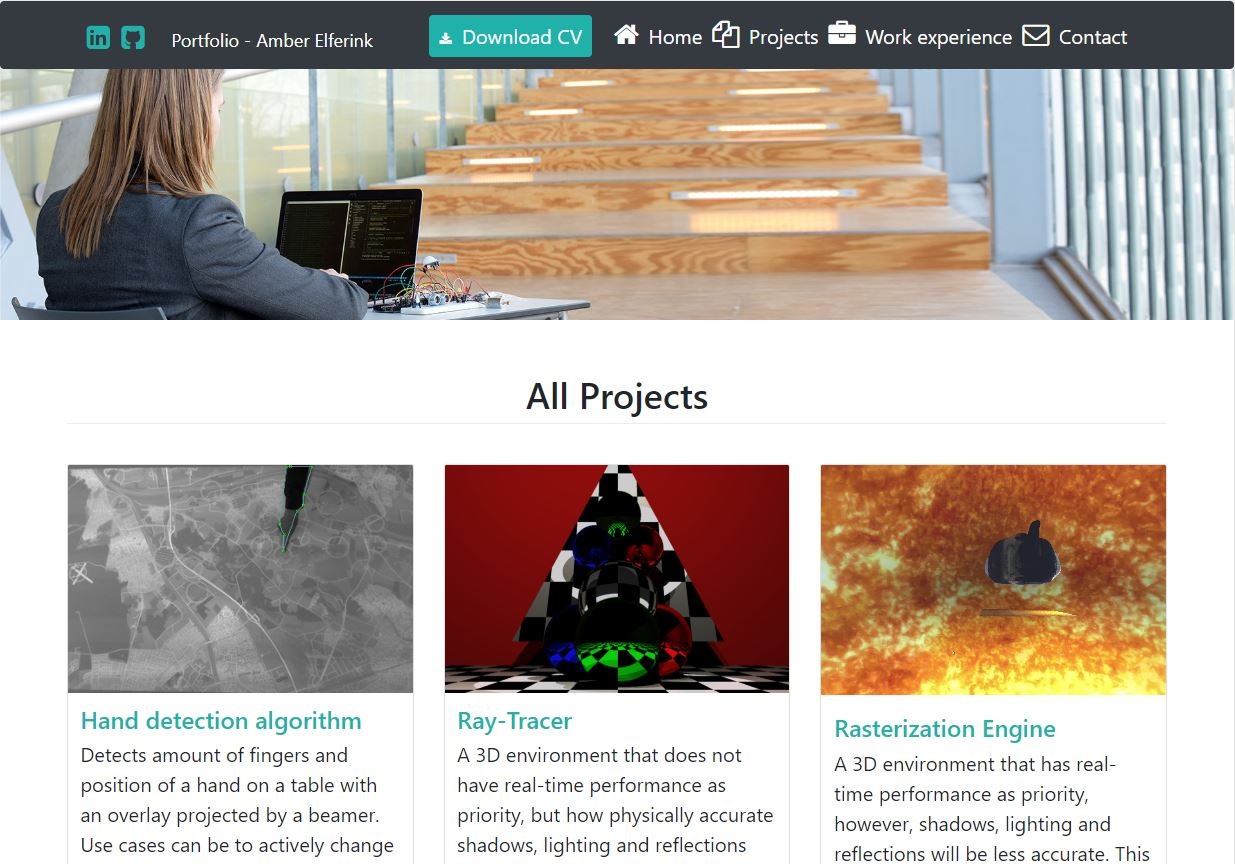

This project is the website you are currently visiting. It has been built from the ground up in Node.js with Express as the back end, borrowing some elements from Bootstrap. It is a very basic, mostly front-end-oriented website. I could have gone for an impressive template or a premade WordPress site. However, I did not want a designer's website; I wanted full control, and templates usually offer few customization options. I am not a designer or front-end developer, but I like to make projects functional. The site is built to be responsive and simple. It originally ran on a Raspberry Pi web server that I set up myself, and has since moved to an Oracle Cloud Compute engine VPS.

The game starts when Larry, the adventurers' trusty steed, besmirches the wall of a dragon's den. The players are locked in the dragon's dungeon, and escaping it is their only hope of freedom. This game was made as a first-year introduction project, within two months, by a team of seven first-year students. It is a co-op puzzle dungeon crawler in which the players need to find colored keys to unlock doors, and each player can only pick up keys of their own color. Some doors can be opened by levers, which a player can hold down to open doors for the others. Along the way, players encounter evil bunnies and penguins they must defeat.

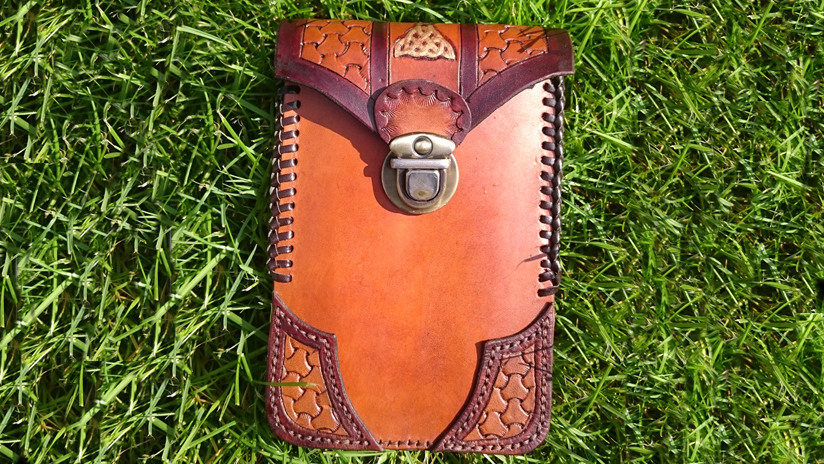

Aside from working on computers, I like working with my hands. Since women's pockets are usually too small to fit a phone, wallet and keys, I made this bag to solve that problem, and I wear it on my belt every day. I first designed it to fit my keys, wallet and phone exactly, and then added decorations. I bought vegetable-tanned leather, stamped, cut and dyed it, and then sewed it together by hand. I have received a lot of positive feedback on this project and have even been approached by strangers to make a similar bag for them.